Author Ebrar Eke

WHAT IS TEXT TO IMAGE?

Text-to-image models are an exciting breakthrough for architects and designers. These models use deep neural networks to generate images that match natural language descriptions. They work by combining a language model that transforms input text (prompt) into a latent representation with a generative image model that produces an image based on that representation. The best models are trained on massive amounts of image and text data scraped from the web, allowing them to produce outputs that approach the quality of real photographs and human-drawn art.

Another approach involves training a model on a large set of images, each captioned with a short text description. The model then learns how to convert pure noise into a coherent image from any caption. This technology provides architects with a powerful tool for visualizing designs and communicating their ideas to clients.

TEXT - TO - IMAGE TOOLS

MIDJOURNEY

Midjourney is an independent research lab based in San Francisco that explores new mediums of thought and seeks to expand the imaginative powers of the human species. The company was founded by David Holz, and its mission is to create innovative technologies that inspire and empower people to think creatively and explore new ideas.

One of Midjourney's most notable projects is an artificial intelligence program called Midjourney AI, which is an interactive chatbot that generates images from natural language descriptions, or "prompts". Architects, designers, and other creatives can send commands and requests to the AI via the popular platform Discord, and it will produce images based on their inputs. This service is designed to help people visualize their ideas and bring their projects to life in a new, innovative way.

Overall, Midjourney is focused on pushing the boundaries of what's possible in the realm of creative expression and intellectual exploration. The company is dedicated to using technology to enhance human potential and help people think more creatively and expansively.

MIDJOURNEY

MIDJOURNEY Magazine

MIDJOURNEY Multi Prompting

DALL-E

DALL-E is an artificial intelligence program developed by OpenAI that is capable of generating high-quality images from textual descriptions. Specifically, it is a neural network trained to generate images using a combination of natural language processing and computer vision techniques. The name "DALL-E" is a combination of the artist Salvador Dali and the character WALL-E from the eponymous Pixar movie.

What sets DALL-E apart from other image-generating AI systems is its ability to create unique and complex images from scratch, based on textual input. For example, given a description like "a green armchair in the shape of an avocado", DALL-E can produce a photorealistic image of the described object that looks like it could exist in the real world.

DALL-E's capabilities have potential applications in a variety of fields, including art, design, and advertising, as well as in the creation of virtual and augmented reality experience

DALL-E

STABLE DIFFUSION

Stable Diffusion is a machine learning algorithm developed by OpenAI that is used for natural language processing tasks such as language modeling, text classification, and question answering.

What sets Stable Diffusion apart from other machine learning algorithms is its ability to handle long-term dependencies in text, meaning it can understand the relationships between words and phrases that are separated by long distances in a sentence or paragraph. This makes it well-suited for complex natural language processing tasks, such as language translation or summarization.

Stable Diffusion is a highly flexible and adaptable algorithm that can be fine-tuned for a wide range of natural language processing tasks, making it a powerful tool for researchers, developers, and data scientists working in the field of artificial intelligence.

Stable Diffusion Online

Stable Diffusion Prompt Book

Install Stable Diffusion Locally

Pros & Cons of the Text-to-Image Tools

Pros of Midjourney | Pros of DALL-E | Pros of Stable Diffusion |

|---|---|---|

High-resolution images | High-quality images of close-up and clothing designs | High-quality images |

Aspect Ratio | Available as an API | Training your own dataset |

Multi Prompting | Prevent users from using it for bad intentions | Unlimited possibilities |

Other users' work is viewable | Multiple results | Exploring of different art styles |

--tile & --iw (image weight) functions | Inpainting | Open source |

/describe function | Outpainting | Free |

Cons of Midjourney | Cons of DALL-E | Cons of Stable Diffusion |

Only available via Discord | Bias in the Training Data | AI technology uncertain |

Privacy costs more money | Limited Resolution | AI art has possible risks because of unlimited possibilities |

No direct edits available | Difficulty in Handling 3D Structures | Local setup can be complex and requires high GPU |

Membership | Membership | No copyright projections worldwide as of now |

Midjourney DALL-E Stable Diffusion

Prompt:

Architectural photography of a mountain museum carved into a landscape on the hill, overlooking the canyon, it rests on the hillside and adapts to the topography and the terrain's morphology, open in all directions towards the surrounding natural landscape in Dolomites, huge windows, curve-linear forms undulating roof covered in vegetation, overlooking the canyon, shape allows access from the building terrace, creating an inner landscape and interlinks the spaces beneath the ground, design dissolves the boundary between the inside and the outside, nature and architecture intimately interconnected, Highly textured, Color Grading, DSLR, Ultra HD, Super Resolution, wide angle, Global Illumination, octane rendering, hyperrealism, high resolution, rule of thirds, volumetric lighting, shadows, misty, intricate detail, photorealistic, cinematic lighting, 4K, Nikon D750PROMPTING

WHAT IS PROMPTING AND HOW TO WRITE ONE?

Prompting is the newest and most extreme form of transfer learning, allowing users to leverage the power of pre-existing models to generate images that meet specific requirements. Each request for an image can be seen as a new task for the model, which has been trained on a vast amount of data. While this technology has democratized transfer learning, it is not always effortless. Writing effective prompts can require as much work as learning a new skill or hobby. Nonetheless, architects and designers can now create stunning visualizations with greater speed and accuracy than ever before.

Did you know you can actually make ChatGPT write your prompts in any format? In the following example, we will indicate the parameters and make ChatGPT write us image prompts.

ChatGPT Prompt:

Please create a table that breaks down an architectural photography composition into the following key elements, where each of these key elements is a column : Composition, Architecture, Camera Angle, Architect Style, Material, Camera Angle and Features, Textures, Detail, Color Palette, Lighting, Location, Time of Day, Mood, Render Engine,

Fill the table with 10 rows of data, where:

Composition = "Architectural Photography,"

After the creation of the table, you can ask to make each row as a sentence with the aspect ratio you wish.

"Please write each row as a comma-separated sentence. Append each sentence with —ar 16:9"

Inspired by @nickfloats

Example Midjourney Prompts Generated by the ChatGPT

Composition| Architecture | Camera Angle | Architect Style | Material |Camera Angle and Features | Textures | Detail| Color Palette | Lighting, Location | Time of Day | Mood | Render Engine

Architectural photography, Eye-level, Modern, Glass, Symmetrical composition, Smooth textures, Minimalist detail, Cool color palette, Natural lighting, New York City, Midday, Serene mood, V-Ray —ar 16:9

Architectural photography, High angle, Gothic, Stone, Leading lines composition, Rough textures, Ornate detail, Warm color palette, Dramatic lighting, London, Sunset, Mysterious mood, Lumion —ar 16:9

Architectural photography, Low angle, Art Deco, Concrete, Rule of thirds composition, Geometric textures, Intricate detail, Neutral color palette, Artificial lighting, Miami, Night, Vibrant mood, Enscape —ar 16:9

Architectural photography, Worm's-eye view, Postmodern, Steel, Asymmetrical composition, Metallic textures, Industrial detail, Monochromatic color palette, Soft lighting, Tokyo, Dawn, Futuristic mood, Blender —ar 16:9

Architectural photography, Bird's-eye view, Classical, Marble, Radial composition, Polished textures, Elaborate detail, Pastel color palette, Harsh lighting, Paris, Mid-afternoon, Elegant mood, 3ds Max —ar 16:9

Architectural photography, Eye-level, Bauhaus, Brick, Diagonal composition, Rough textures, Sparse detail, Bold color palette, Natural lighting, Berlin, Midday, Playful mood, KeyShot —ar 16:9

Architectural photography, Low angle, Brutalist, Concrete, Symmetrical composition, Raw textures, Minimalist detail, Monochromatic color palette, Harsh lighting, Beijing, Afternoon, Stark mood, Corona Renderer —ar 16:9

Architectural photography, High angle, Art Nouveau, Ceramic, Leading lines composition, Smooth textures, Ornate detail, Earthy color palette, Dramatic lighting, Barcelona, Sunset, Whimsical mood, OctaneRender —ar 16:9

Architectural photography, Worm's-eye view, International Style, Glass and steel, Rule of thirds composition, Reflective textures, Minimalist detail, Cool color palette, Soft lighting, Chicago, Night, Calm mood, Arnold Renderer —ar 16:9

Architectural photography, Eye-level, Gothic Revival, Stone, Symmetrical composition, Rough textures, Intricate detail, Warm color palette, Natural lighting, Edinburgh, Morning, Majestic mood, Redshift —ar 16:9

ChatGPT Generated Midjourney Prompts + Midjourney Results

TEXT - TO - 3D

POINT-E

OpenAI, has recently open-sourced Point-E, a new machine learning system that generates 3D objects from a text prompt. Instead of creating 3D objects in the traditional sense, Point-E generates point clouds, a set of data points in space that represents a 3D shape. Although this approach is more computationally efficient, it has its limitations in capturing an object’s fine-grained shape or texture. To overcome this limitation, the Point-E team trained a separate AI system to convert the point clouds to meshes. This new tool can produce 3D models in one to two minutes on a single Nvidia V100 GPU.

Point-E is a successor to Dall-E 2, which creates high-quality images from scratch. Point-e takes things one step further, turning images into 3D models. It works in two parts: first, by using a text-to-image AI to convert a text prompt into an image, and then using a second function to turn that image into a 3D model. Unlike a traditional 3D model, Point-E generates a point cloud, which then goes through a second step to convert it into a mesh. The end result is a 3D model that better resembles the shapes, molds, and edges of an object. Although Point-E generates a lower-quality image, it is still an impressive tool that expands the possibilities of text-to-image technology.

Seems interesting right? Check the link to try it out by yourself!

Point-e

IMAGE - TO - IMAGE

ControlNET

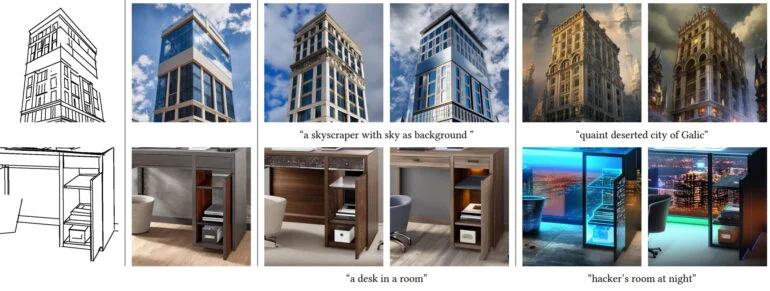

As architects, we understand the importance of having control over the creative process. ControlNet, a neural network model, offers us just that. This family of networks is based on Stable Diffusion, a powerful text-to-image generation model. However, ControlNet takes it a step further by adding a new layer of conditioning, which allows for more structural and artistic control over image generation.

Introduced in a research paper by Lvmin Zhang and Maneesh Agrawala, ControlNet is designed to learn very specific features related to the tasks it is being fine-tuned on. It can generate images from canny images, or even more complex ones like generating images from normal maps.

By training on a specific task, ControlNet can create more customized and unique images, allowing for greater artistic expression and control in the image generation process. Whether you are creating architectural models or visualizing interior designs, ControlNet can help bring your ideas to life with precision and creativity.

The authors fine-tune ControlNet to generate images from prompts and specific image structures. As such, ControlNet has two conditionings. ControlNet models have been fine-tuned to generate images from:

- Canny edge

- Hough line

- Scribble drawing

- HED edge

- Pose detections

- Segmentation maps

- Depth maps

- Cartoon line drawing

- Normal maps

These functions give us extra control over the images that we can generate.

Follow the link to try out the demo by yourself and watch the tutorial by Carlos Bannon to learn more!

ControlNET by Stable Diffusion

Digital Futures Tutorial by Carlos Bannon

VERAS

Veras® is an AI-powered visualization add-in for Revit®, that uses your 3d model geometry as a substrate for creativity and inspiration.

VERAS

TEXT - TO - VIDEO

Deforum by Stable Diffusion

Deforum is a tool to create animation videos with Stable Diffusion. All you need to provide the prompts and settings for the camera movements.

Deforum for AUTOMATIC111

How to make Deforum Video

Quick Guide to Deforum

Replicate Deforum Demo

VIDEO - TO - VIDEO

RUNWAY | GEN-1 & GEN-2

"The developers of Runway, a Web-based machine-learning-powered video editor, have unveiled Gen-1, a brand new video-to-video generative AI that allows its users to generate new videos out of existing ones using text prompts and images. According to the team, the newly-introduced AI is capable of applying the composition and style of an image or a text prompt to the structure of the source video, realistically and consistently synthesizing new videos as a result."

GEN-2

AI TOOLS WORKFLOW EXAMPLE

You can actually combine these tools and create a unique workflow of your own!

Midjourney + Image to 3D Mesh

ZoeDepth

Sketch to Render

Midjourney generated sketch image and ControlNet generated render result

Image-to-Image with ControlNet

Input Image Credits: Bauhaus-Universität Weimar

LIST OF AI TOOLS RESOURCES

AI Tools for AEC

RUNWAY ML

Stable Diffusion in Blender

Blender Dream Textures

NVIDIA CANVAS

Adobe Firefly